3 Philosophy of statistics

Statistical analysis is very important in addressing the problem of induction. Can inductive inference be formalized? What are the caveats? Can inductive inference be automated? How does machine learning work?

All knowledge is, in final analysis, history. All sciences are, in the abstract, mathematics. All judgements are, in their rationale, statistics.

– C. R. Rao 1

1 Rao (1997), p. x.

3.1 Introduction to the foundations of statistics

3.1.1 Problem of induction

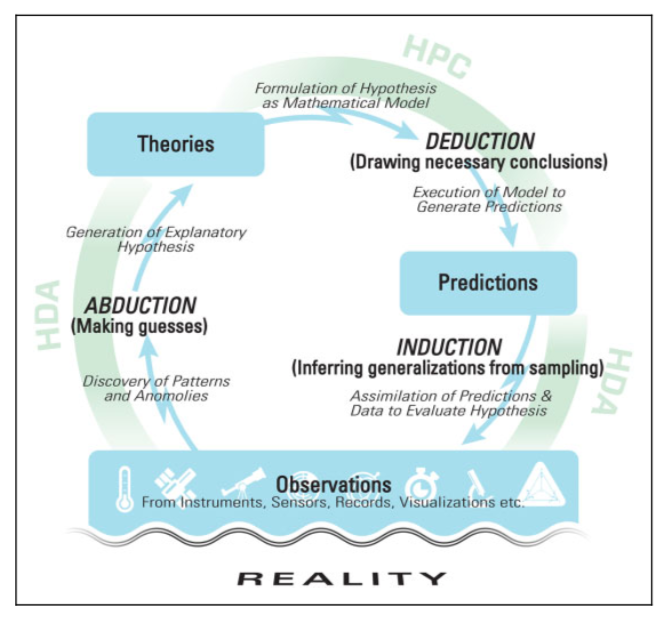

A key issue for the scientific method, as discussed in the previous outline, is the problem of induction. Inductive inferences are used in the scientific method to make generalizations from finite data. This introduces unique avenues of error not found in purely deductive inferences, like in logic and mathematics. Compared to deductive inferences, which are sound and necessarily follow if an argument is valid and all of its premises obtain, inductive inferences can be valid and probably (not certainly) sound, and therefore can still result in error in some cases because the support of the argument is ultimately probabilistic.

A skeptic may further probe if we are even justified in using the probabilities we use in inductive arguments. What is the probability the Sun will rise tomorrow? What kind of probabilities are reasonable?

In this outline, we sketch and explore how the mathematical theory of statistics has arisen to wrestle with the problem of induction, and how it equips us with careful ways of framing inductive arguments and notions of confidence in them.

See also:

3.1.2 Early investigators

- Ibn al-Haytham (c. 965-1040)

- “Ibn al-Haytham was an early proponent of the concept that a hypothesis must be supported by experiments based on confirmable procedures or mathematical evidence—an early pioneer in the scientific method five centuries before Renaissance scientists.” - Wikipedia

- Gerolamo Cardano (1501-1576)

- Book on Games of Chance (1564)

- John Graunt (1620-1674)

- Jacob Bernoulli (1655-1705)

The art of measuring, as precisely as possible, probabilities of things, with the goal that we would be able always to choose or follow in our judgments and actions that course, which will have been determined to be better, more satisfactory, safer or more advantageous. 4

4 Bernoulli, J. (1713). Ars Conjectandi, Chapter II, Part IV, defining the art of conjecture [wikiquote].

- Thomas Bayes (1701-1761)

- Pierre-Simon Laplace (1749-1827)

- The rule of succession, bayesian

- Carl Friedrich Gauss (1777-1855)

- John Stuart Mill (1806-1873)

- Francis Galton (1822-1911)

- Regression towards the mean in phenotypes

- John Venn (1834-1923)

- The Logic of Chance (1866) 5

- William Stanley Jevons (1835-1882)

3.1.3 Foundations of modern statistics

- Central limit theorem

- De Moivre-Laplace theorem (1738)

- Glivenko-Cantelli theorem (1933)

- Charles Sanders Peirce (1839-1914)

- Formulated modern statistics in “Illustrations of the Logic of Science”, a series published in Popular Science Monthly (1877-1878), and also “A Theory of Probable Inference” in Studies in Logic (1883). 8

- With a repeated measures design, introduced blinded, controlled randomized experiments (before Fisher).

- Karl Pearson (1857-1936)

- The Grammar of Science (1892)

- “On the criterion that a given system of deviations…” (1900) 9

- Proposed testing the validity of hypothesized values by evaluating the chi distance between the hypothesized and the empirically observed values via the \(p\)-value.

- With Frank Raphael Weldon, he established the journal Biometrika in 1902.

- Founded the world’s first university statistics department at University College, London in 1911.

- John Maynard Keynes (1883-1946)

- Keynes, J. M. (1921). A Treatise on Probability. 10

- Ronald Fisher (1890-1972)

- Fisher significance of the null hypothesis (\(p\)-values)

- “On the ‘probable error’ of a coefficient of correlation deduced from a small sample” 13

- Definition of likelihood

- ANOVA

- Statistical Methods for Research Workers (1925)

- The Design of Experiments (1935)

- “Statistical methods and scientific induction” 14

- The Lady Tasting Tea 15

- Jerzy Neyman (1894-1981)

- biography by Reid 16

- Neyman, J. (1955). The problem of inductive inference. 17

- Discussion: Mayo, D.G. (2014). Power taboos: Statue of Liberty, Senn, Neyman, Carnap, Severity.

- Shows that Neyman read Carnap.

- Carnap, R. (1960). Logical Foundations of Probability. 18

- Shows that Carnap read Neyman.

- TODO: Look into this more.

- Egon Pearson (1895-1980)

- Neyman-Pearson confidence intervals with fixed error probabilities (also \(p\)-values but considering two hypotheses involves two types of errors)

- Harold Jeffreys (1891-1989)

- objective (non-informative) Jeffreys priors

- Andrey Kolmogorov (1903-1987)

- C.R. Rao (1920-2023)

- Ray Solomonoff (1926-2009)

- Shun’ichi Amari (b. 1936)

- Judea Pearl (b. 1936)

3.1.4 Pedagogy

- Kendall 19

- James 20

- Cowan 21

- Cranmer 22

- Jaynes, E.T. (2003). Probability Theory: The Logic of Science. 23

- Lista: book 24, notes 25

- Cox 26

- Behnke, O., Kröninger, K., Schott, G., & Schörner-Sadenius, T. (2013). Data Analysis in High Energy Physics: A Practical Guide to Statistical Methods. 27

- Cousins 28

- Weisberg 29

- Cranmer, K. (2020). Statistics and Data Science.

- Cosma Shalizi’s notes on

- Gelman, A. & Vehtari, A. (2021). What are the most important statistical ideas of the past 50 years? 30

- Taboga, M. (2022). statlect.com.

- Otsuka, J. (2023). Thinking About Statistics: The Philosophical Foundations. 31

- Otsuka, J. (2023). Talk: What machine learning tells us about the mathematical structures of concepts.

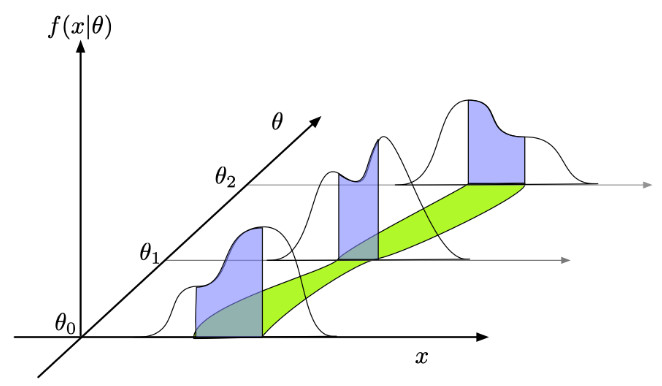

3.3 Statistical models

3.3.1 Parametric models

- Data: \(x_i\)

- Parameters: \(\theta_j\)

- Model: \(f(\vec{x} ; \vec{\theta})\)

- McCullagh, P. (2002). What is a statistical model?. 46

46 McCullagh (2002).

3.3.2 Canonical distributions

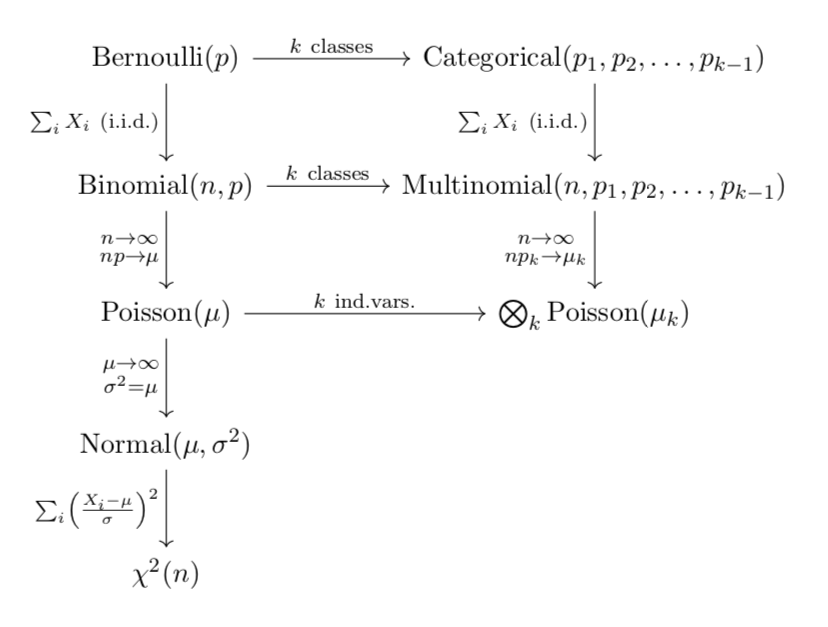

3.3.2.1 Bernoulli distribution

\[ \mathrm{Ber}(k; p) = \begin{cases} p & \mathrm{if}\ k = 1 \\ 1-p & \mathrm{if}\ k = 0 \end{cases} \]

which can also be written as

\[ \mathrm{Ber}(k; p) = p^k \: (1-p)^{(1-k)} \quad \mathrm{for}\ k \in \{0, 1\} \]

or

\[ \mathrm{Ber}(k; p) = p k + (1-p)(1-k) \quad \mathrm{for}\ k \in \{0, 1\} \]

- Binomial distribution

- Poisson distribution

TODO: explain, another important relationship is

3.3.2.2 Normal/Gaussian distribution

\[ N(x \,|\, \mu, \sigma^2) = \frac{1}{\sqrt{2\,\pi\:\sigma^2}} \: \exp\left(\frac{-(x-\mu)^2}{2\,\sigma^2}\right) \]

and in \(k\) dimensions:

\[ N(\vec{x} \,|\, \vec{\mu}, \boldsymbol{\Sigma}) = (2 \pi)^{-k/2}\:\left|\boldsymbol{\Sigma}\right|^{-1/2} \: \exp\left(\frac{-1}{2}\:(\vec{x}-\vec{\mu})^\intercal \:\boldsymbol{\Sigma}^{-1}\:(\vec{x}-\vec{\mu})\right) \]

where \(\boldsymbol{\Sigma}\) is the covariance matrix of the distribution (defined in eq. 3.2).

3.3.3 Central limit theorem

Let \(X_{1}\), \(X_{2}\), … , \(X_{n}\) be a random sample drawn from any distribution with a finite mean \(\mu\) and variance \(\sigma^{2}\). As \(n \rightarrow \infty\), the distribution of

\[ \frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \sim N(0, 1) \]

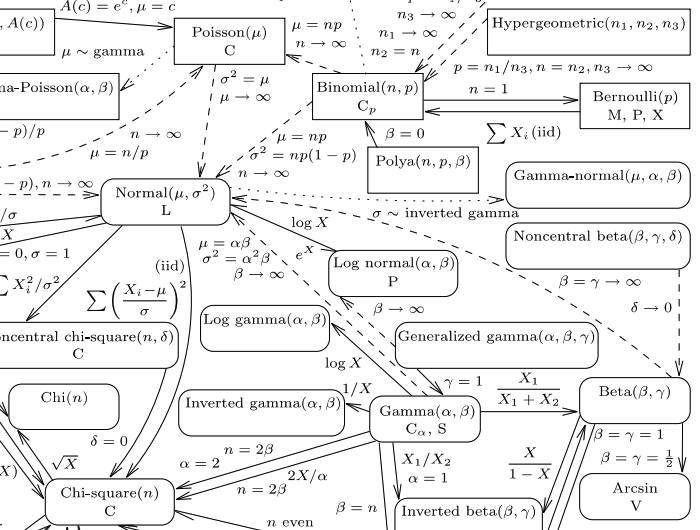

- \(\chi^2\) distribution

- Univariate distribution relationships

47 Leemis & McQueston (2008).

- The exponential family of distributions are maximum entropy distributions.

3.3.4 Mixture models

- Gaussian mixture models (GMM)

- Marked poisson

- pyhf model description

- HistFactory 48

48 Cranmer, K. et al. (2012).

3.4 Point estimation and confidence intervals

3.4.1 Inverse problems

Recall that in the context of parametric models of data, \(x_i\) the pdf of which is modeled by a function, \(f(x_i ; \theta_j)\) with parameters, \(\theta_j\). In a statistical inverse problem, the goal is to infer values of the model parameters, \(\theta_j\) given some finite set of data, \(\{x_i\}\) sampled from a probability density, \(f(x_i; \theta_j)\) that models the data reasonably well 49.

49 This assumption that the model models the data “reasonably” well reflects that to the degree required by your analysis, the important features of the data match well within the systematic uncertainties parametrized within the model. If the model is incomplete because it is missing an important feature of the data, then this is the “ugly” (class-3) error in the Sinervo classification of systematic uncertainties.

- Inverse problem

- Inverse probability (Fisher)

- Statistical inference

- See also: Structural realism

- Estimators

- Regression

- Accuracy vs precision 50

3.4.2 Bias and variance

The bias of an estimator, \(\hat\theta\), is defined as

\[ \mathrm{Bias}(\hat{\theta}) \equiv \mathbb{E}(\hat{\theta} - \theta) = \int dx \: P(x|\theta) \: (\hat{\theta} - \theta) \]

The mean squared error (MSE) of an estimator has a similar formula to variance (eq. 3.1) except that instead of quantifying the square of the difference of the estimator and its expected value, the MSE uses the square of the difference of the estimator and the true parameter:

\[ \mathrm{MSE}(\hat{\theta}) \equiv \mathbb{E}((\hat{\theta} - \theta)^2) \]

The MSE of an estimator can be related to its bias and its variance by the following proof:

\[ \begin{align} \mathrm{MSE}(\hat{\theta}) &= \mathbb{E}(\hat{\theta}^2 - 2 \: \hat{\theta} \: \theta + \theta^2) \\ &= \mathbb{E}(\hat{\theta}^2) - 2 \: \mathbb{E}(\hat{\theta}) \: \theta + \theta^2 \end{align} \]

noting that

\[ \mathrm{Var}(\hat{\theta}) = \mathbb{E}(\hat{\theta}^2) - \mathbb{E}(\hat{\theta})^2 \]

and

\[ \begin{align} \mathrm{Bias}(\hat{\theta})^2 &= \mathbb{E}(\hat{\theta} - \theta)^2 \\ &= \mathbb{E}(\hat{\theta})^2 - 2 \: \mathbb{E}(\hat{\theta}) \: \theta + \theta^2 \end{align} \]

we see that MSE is equivalent to

\[ \mathrm{MSE}(\hat{\theta}) = \mathrm{Var}(\hat{\theta}) + \mathrm{Bias}(\hat{\theta})^2 \]

For an unbiased estimator, the MSE is the variance of the estimator.

TODO:

- Note the discussion of the bias-variance tradeoff by Cranmer.

- Note the new deep learning view. See Deep learning.

See also:

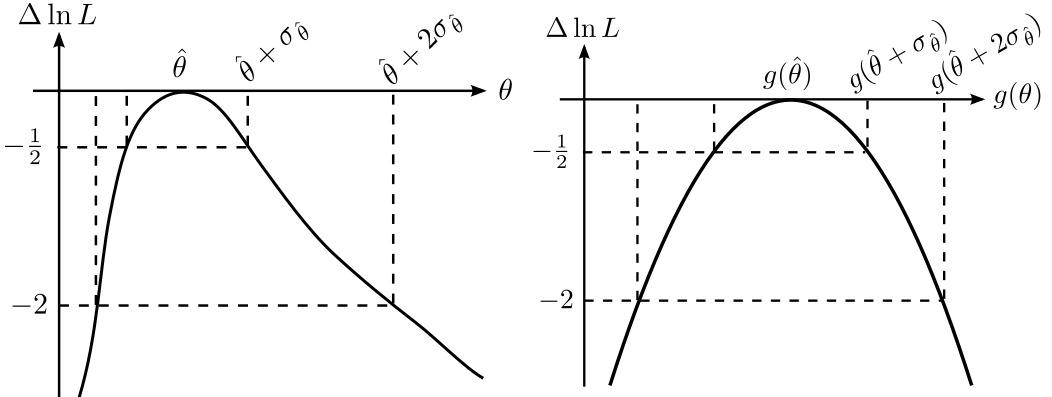

3.4.3 Maximum likelihood estimation

A maximum likelihood estimator (MLE) was first used by Fisher. 51

51 Aldrich (1997).

\[\hat{\theta} \equiv \underset{\theta}{\mathrm{argmax}} \: \mathrm{log} \: L(\theta) \]

Maximizing \(\mathrm{log} \: L(\theta)\) is equivalent to maximizing \(L(\theta)\), and the former is more convenient because for data that are independent and identically distributed (i.i.d.) the joint likelihood can be factored into a product of individual measurements:

\[ L(\theta) = \prod_i L(\theta|x_i) = \prod_i P(x_i|\theta) \]

and taking the log of the product makes it a sum:

\[ \mathrm{log} \: L(\theta) = \sum_i \mathrm{log} \: L(\theta|x_i) = \sum_i \mathrm{log} \: P(x_i|\theta) \]

Maximizing \(\mathrm{log} \: L(\theta)\) is also equivalent to minimizing \(-\mathrm{log} \: L(\theta)\), the negative log-likelihood (NLL). For distributions that are i.i.d.,

\[ \mathrm{NLL} \equiv - \log L = - \log \prod_i L_i = - \sum_i \log L_i = \sum_i \mathrm{NLL}_i \]

3.4.3.1 Invariance of likelihoods under reparametrization

- Likelihoods are invariant under reparametrization. 52

- Bayesian posteriors are not invariant in general.

52 F. James (2006), p. 234.

See also:

3.4.3.2 Ordinary least squares

3.4.4 Variance of MLEs

- Taylor expansion of a likelihood near its maximum

- Cramér-Rao bound 55

- Define efficiency of an estimator.

- Common formula for variance of unbiased and efficient estimators

- Proof in Rice 56

- Cranmer: Cramér-Rao bound

- Nielsen, F. (2013). Cramer-Rao lower bound and information geometry. 57

- Under some reasonable conditions, one can show that MLEs are efficient and unbiased. TODO: find ref.

- Fisher information matrix

- “is the key part of the proof of Wilks’ theorem, which allows confidence region estimates for maximum likelihood estimation (for those conditions for which it applies) without needing the Likelihood Principle.”

- Confidence intervals

- Variance of MLEs

- Wilks’s theorem

- Method of \(\Delta\chi^2\) or \(\Delta{}L\)

- Frequentist confidence intervals (e.g. at 95% CL)

- Cowan 58

- Likelihood need not be Gaussian 59

- Minos method in particle physics in MINUIT 60

- See slides for my talk: Primer on statistics: MLE, Confidence Intervals, and Hypothesis Testing

- Asymptotics

- See Asymptotics.

56 Rice (2007), p. 300–2.

57 Nielsen (2013).

58 Cowan (1998), p. 130-5.

59 F. James (2006), p. 234.

60 F. James & Roos (1975).

- Common error bars

- Poisson error bars

- Gaussian approximation: \(\sqrt{n}\)

- Wilson-Hilferty approximation

- Binomial error bars

- Error on efficiency or proportion

- See: Statistical classification

- Poisson error bars

- More on confidence intervals

- Loh, W.Y. (1987). Calibrating confidence coefficients. 61

- Discussion

- Wainer, H. (2007). The most dangerous equation. (de Moivre’s equation for variance of means) 62

- Misc

- Karhunen-Loève eigenvalue problems in cosmology: How should we tackle large data sets? 63

3.4.5 Bayesian credibility intervals

- Inverse problem to find a posterior probability distribution.

- Maximum a posteriori estimation (MAP)

- Prior sensitivity

- Betancourt, M. (2018). Towards a principled Bayesian workflow - ipynb

- Not invariant to reparametrization in general

- Jeffreys priors are

- TODO: James

3.4.6 Uncertainty on measuring an efficiency

- Binomial proportion confidence interval

- Normal/Gaussian/Wald interval

- Wilson score interval

- Clopper-Pearson interval (1934) 64

- Agresti-Coull interval (1998) 65

- Rule of three (1983) 66

- Review by Brown, Cai, & DasGupta (2001) 67

- Casadei, D. (2012). Estimating the selection efficiency. 68

- Precision vs recall for classification, again

- Classification and logistic regression

- See also:

- Logistic regression in the section on Classical machine learning.

- Clustering in the section on Classical machine learning.

3.4.7 Examples

- Some sample mean

- Bayesian lighthouse

- Measuring an efficiency

- Some HEP fit

3.5 Statistical hypothesis testing

3.5.1 Null hypothesis significance testing

- Karl Pearson observing how rare sequences of roulette spins are

- Null hypothesis significance testing (NHST)

- goodness of fit

- Fisher

Fisher:

3.5.2 Neyman-Pearson theory

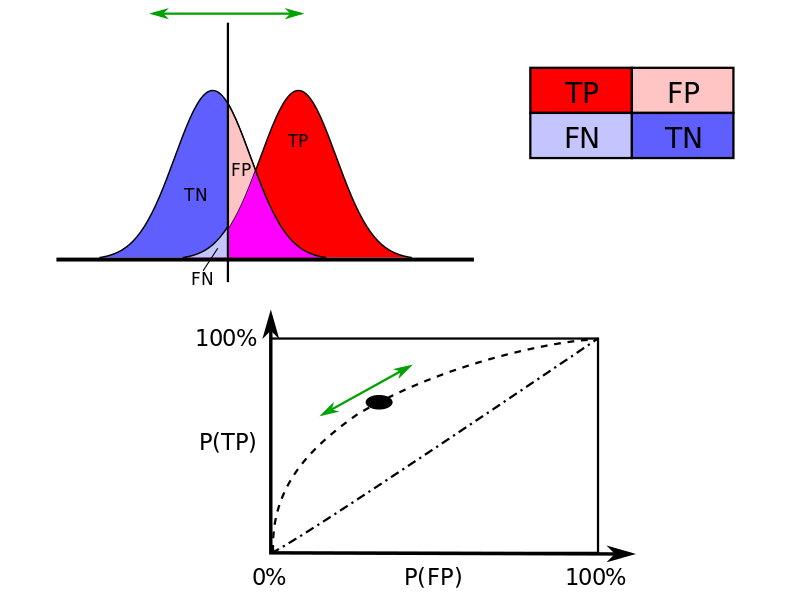

3.5.2.1 Introduction

- probes an alternative hypothesis 70

- Type-1 and type-2 errors

- Power and confidence

- Cohen, J. (1992). A power primer. 71

- Cranmer, K. (2020). Thumbnail of LHC statistical procedures.

- ATLAS and CMS Collaborations. (2011). Procedure for the LHC Higgs boson search combination in Summer 2011. 72

- Cowan, G., Cranmer, K., Gross, E., & Vitells, O. (2011). Asymptotic formulae for likelihood-based tests of new physics. 73

70 Goodman (1999a). p. 998.

71 J. Cohen (1992).

72 ATLAS and CMS Collaborations (2011).

73 Cowan, Cranmer, Gross, & Vitells (2011).

See also:

3.5.2.2 Neyman-Pearson lemma

Neyman-Pearson lemma: 74

74 Neyman & Pearson (1933).

For a fixed signal efficiency, \(1-\alpha\), the selection that corresponds to the lowest possible misidentification probability, \(\beta\), is given by

\[ \frac{L(H_1)}{L(H_0)} > k_{\alpha} \]

where \(k_{\alpha}\) is the cut value required to achieve a type-1 error rate of \(\alpha\).

Neyman-Pearson test statistic:

\[ q_\mathrm{NP} = - 2 \ln \frac{L(H_1)}{L(H_0)} \]

Profile likelihood ratio:

\[ \lambda(\mu) = \frac{ L(\mu, \hat{\theta}_\mu) }{ L(\hat{\mu}, \hat{\theta}) } \]

where \(\hat{\theta}\) is the (unconditional) maximum-likelihood estimator that maximizes \(L\), while \(\hat{\theta}_\mu\) is the conditional maximum-likelihood estimator that maximizes \(L\) for a specified signal strength, \(\mu\), and \(\theta\) as a vector includes all other parameters of interest and nuisance parameters.

3.5.2.3 Neyman construction

Cranmer: Neyman construction.

TODO: fix

\[ q = - 2 \ln \frac{L(\mu\,s + b)}{L(b)} \]

3.5.2.4 Flip-flopping

- Flip-flopping and Feldman-Cousins confidence intervals 75

75 Feldman & Cousins (1998).

3.5.3 p-values and significance

- \(p\)-values and significance 76

- Coverage

- Fisherian vs Neyman-Pearson \(p\)-values

Cowan et al. define a \(p\)-value as

a probability, under assumption of \(H\), of finding data of equal or greater incompatibility with the predictions of \(H\). 77

77 Cowan et al. (2011), p. 2–3.

Also:

It should be emphasized that in an actual scientific context, rejecting the background-only hypothesis in a statistical sense is only part of discovering a new phenomenon. One’s degree of belief that a new process is present will depend in general on other factors as well, such as the plausibility of the new signal hypothesis and the degree to which it can describe the data. Here, however, we only consider the task of determining the \(p\)-value of the background-only hypothesis; if it is found below a specified threshold, we regard this as “discovery”. 78

78 Cowan et al. (2011), p. 3.

3.5.3.1 Uppper limits

- Cousins, R.D. & Highland, V.L. (1992). Incorporating systematic uncertainties into an upper limit. 79

79 Cousins & Highland (1992).

3.5.3.2 CLs method

3.5.4 Asymptotics

- Analytic variance of the likelihood-ratio of gaussians: \(\chi^2\)

- Pearson \(\chi^2\)-test

- Wilks 83

- Under the null hypothesis, \(-2 \ln(\lambda) \sim \chi^{2}_{k}\), where \(k\), the degrees of freedom for the \(\chi^{2}\) distribution is the number of parameters of interest (including signal strength) in the signal model but not in the null hypothesis background model.

- Wald 84

- Wald generalized the work of Wilks for the case of testing some nonzero signal for exclusion, showing \(-2 \ln(\lambda) \approx (\hat{\theta} - \theta)^\intercal V^{-1} (\hat{\theta} - \theta) \sim \mathrm{noncentral}\:\chi^{2}_{k}\).

- In the simplest case where there is only one parameter of interest (the signal strength, \(\mu\)), then \(-2 \ln(\lambda) \approx \frac{ (\hat{\mu} - \mu)^{2} }{ \sigma^2 } \sim \mathrm{noncentral}\:\chi^{2}_{1}\).

- Cowan, G., Cranmer, K., Gross, E., & Vitells, O. (2011). Asymptotic formulae for likelihood-based tests of new physics. 85

- Wald approximation

- Asimov dataset

- Talk by Armbruster: Asymptotic formulae (2013).

- Cowan, G., Cranmer, K., Gross, E., & Vitells, O. (2012). Asymptotic distribution for two-sided tests with lower and upper boundaries on the parameter of interest. 86

- Bhattiprolu, P.N., Martin, S.P., & Wells, J.D. (2020). Criteria for projected discovery and exclusion sensitivities of counting experiments. 87

3.5.5 Student’s t-test

- Student’s t-test

- ANOVA

- A/B-testing

3.5.6 Decision theory

- Suppes, P. (1961). The philosophical relevance of decision theory. The Journal of Philosophy, 58, 605–614. http://www.jstor.org/stable/2023536

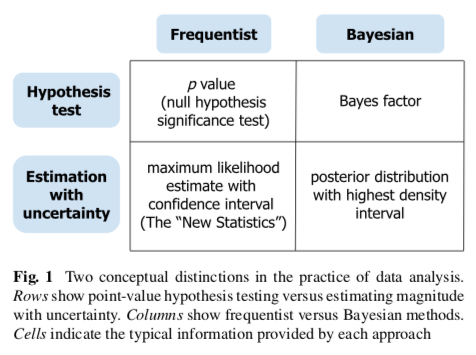

- Frequentist vs bayesian decision theory 88

- Goodman, S.N. (1999). Toward evidence-based medical statistics 2: The Bayes factor. 89

Support for using Bayes factors:

which properly separates issues of long-run behavior from evidential strength and allows the integration of background knowledge with statistical findings. 90

90 Goodman (1999a). p. 995.

See also:

3.5.7 Examples

- Difference of two means: \(t\)-test

- A/B-testing

- New physics

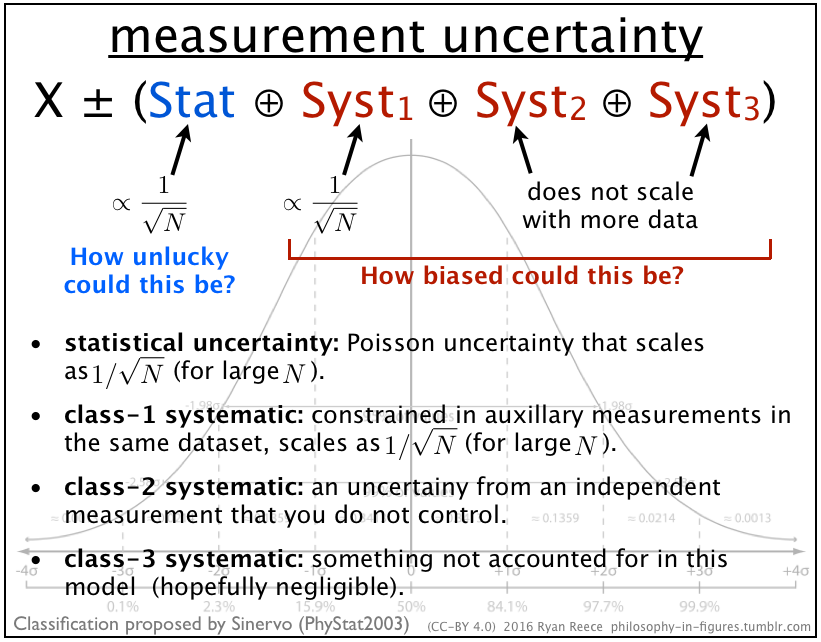

3.6 Uncertainty quantification

3.6.1 Sinervo classification of systematic uncertainties

- Class-1, class-2, and class-3 systematic uncertanties (good, bad, ugly), Classification by Pekka Sinervo (PhyStat2003) 91

- Not to be confused with type-1 and type-2 errors in Neyman-Pearson theory

- Heinrich, J. & Lyons, L. (2007). Systematic errors. 92

- Caldeira & Nord 93

Lyons:

In analyses involving enough data to achieve reasonable statistical accuracy, considerably more effort is devoted to assessing the systematic error than to determining the parameter of interest and its statistical error. 94

94 Lyons (2008), p. 890.

- Poincaré’s three levels of ignorance

3.6.2 Profile likelihoods

- Profiling and the profile likelihood

- Importance of Wald and Cowan et al.

- hybrid Bayesian-frequentist method

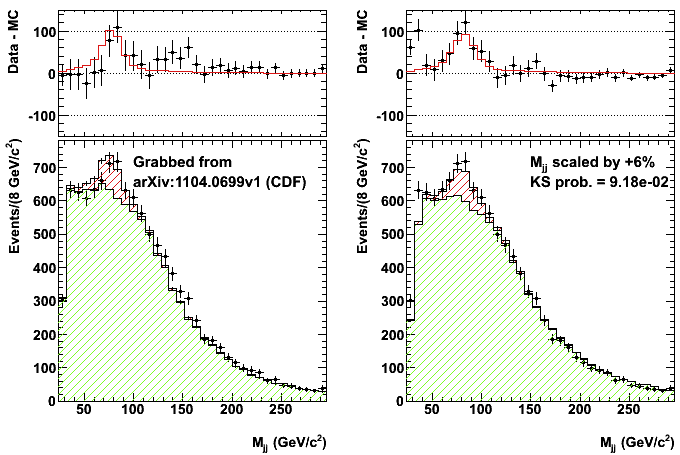

3.6.3 Examples of poor estimates of systematic uncertanties

- Unaccounted-for effects

- CDF \(Wjj\) bump

- Phys.Rev.Lett.106:171801 (2011) / arxiv:1104.0699

- Invariant mass distribution of jet pairs produced in association with a \(W\) boson in \(p\bar{p}\) collisions at \(\sqrt{s}\) = 1.96 TeV

- Dorigo, T. (2011). The jet energy scale as an explanation of the CDF signal.

- OPERA. (2011). Faster-than-light neutrinos.

- BICEP2 claimed evidence of B-modes in the CMB as evidence of cosmic inflation without accounting for cosmic dust.

3.6.4 Conformal prediction

- Conformal prediction

- Gammerman, A., Vovk, V., & Vapnik, V. (1998). Learning by transduction. 95

95 Gammerman, Vovk, & Vapnik (1998).

3.7 Statistical classification

3.7.1 Introduction

- Precision vs recall

- Recall is sensitivity

- Sensitivity vs specificity

- Accuracy

3.7.2 Examples

- TODO

See also:

3.8 Causal inference

3.8.1 Introduction

- Rubin, D. B. (1974). Estimating causal effects of treatments in randomized and nonrandomized studies. 96

- Lewis, D. (1981). Causal decision theory. 97

- Pearl, J. (2018). The Book of Why: The new science of cause and effect. 98

- Bareinboim, E., Correa, J.D., Ibeling, D., & Icard, T. (2021). On Pearl’s hierarchy and the foundations of causal inference. 99

See also:

3.8.2 Causal models

- Structural Causal Model (SCM)

- Pearl, J. (2009). Causal inference in statistics: An overview. 100

- Robins, J.M. & Wasserman, L. (1999). On the impossibility of inferring causation from association without background knowledge. 101

- Peters, J., Janzing, D., & Schölkopf, B. (2017). Elements of Causal Inference. 102

- Lundberg, I., Johnson, R., & Stewart, B.M. (2021). What is your estimand? Defining the target quantity connects statistical evidence to theory. 103

3.8.3 Counterfactuals

- Counterfactuals

- Regret

- Interventionist conception of causation

- Ismael, J. (2023). Reflections on the asymmetry of causation. 104

- Chevalley, M., Schwab, P., & Mehrjou, A. (2024). Deriving causal order from single-variable interventions: Guarantees & algorithm. 105

3.9 Exploratory data analysis

3.9.1 Introduction

- William Playfair (1759-1823)

- Father of statistical graphics

- John Tukey (1915-2000)

- Exploratory data analysis

- Exploratory Data Analysis (1977) 106

106 Tukey (1977).

3.9.2 Look-elsewhere effect

- Look-elsewhere effect (LEE)

- AKA File-drawer effect

- Stopping rules

- validation dataset

- statistical issues, violates the likelihood principle

3.9.3 Archiving and data science

- “Data science”

- Data collection, quality, analysis, archival, and reinterpretation

- Scientific research and big data

- Reproducible an reinterpretable

- RECAST

- Chen, X. et al. (2018). Open is not enough. 107

107 Chen, X. et al. (2018).

3.10 “Statistics Wars”

3.10.1 Introduction

Cranmer:

Bayes’s theorem is a theorem, so there’s no debating it. It is not the case that Frequentists dispute whether Bayes’s theorem is true. The debate is whether the necessary probabilities exist in the first place. If one can define the joint probability \(P (A, B)\) in a frequentist way, then a Frequentist is perfectly happy using Bayes theorem. Thus, the debate starts at the very definition of probability. 110

110 Cranmer (2015), p. 6.

Neyman:

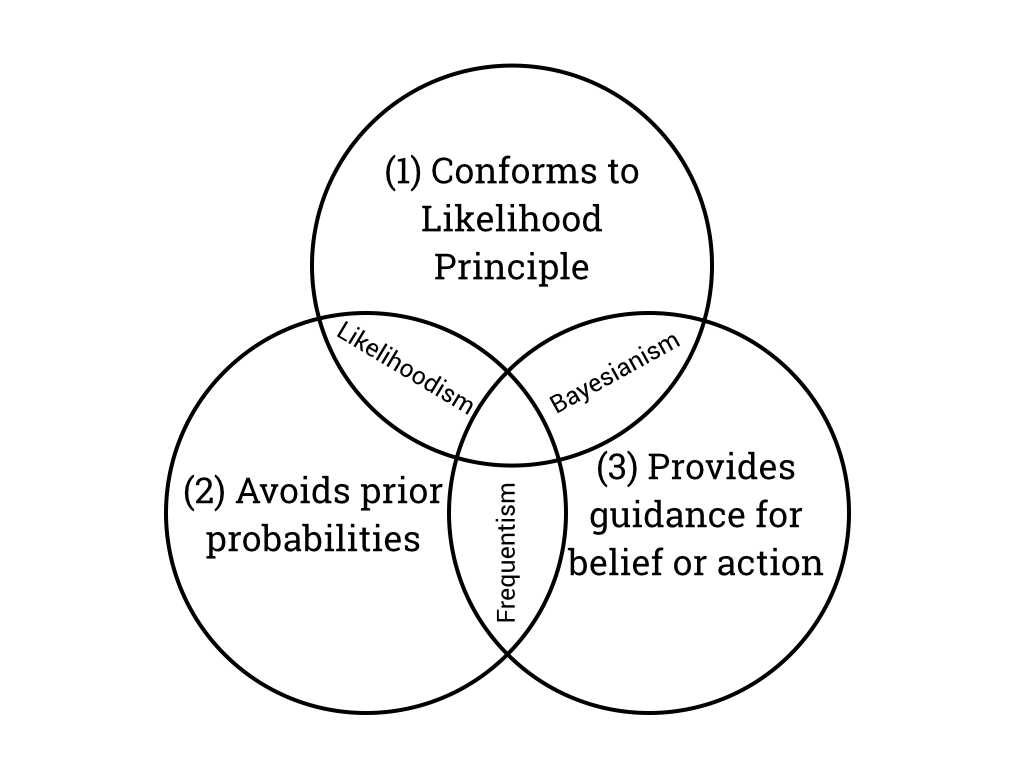

3.10.2 Likelihood principle

- Likelihood principle

- The likelihood principle is the proposition that, given a statistical model and a data sample, all the evidence relevant to model parameters is contained in the likelihood function.

- The history of likelihood 113

- Allan Birnbaum proved that the likelihood principle follows from two more primitive and seemingly reasonable principles, the conditionality principle and the sufficiency principle. 114

- Hacking identified the “law of likelihood”. 115

- Berger & Wolpert. (1988). The Likelihood Principle. 116

O’Hagan:

The first key argument in favour of the Bayesian approach can be called the axiomatic argument. We can formulate systems of axioms of good inference, and under some persuasive axiom systems it can be proved that Bayesian inference is a consequence of adopting any of these systems… If one adopts two principles known as ancillarity and sufficiency principles, then under some statement of these principles it follows that one must adopt another known as the likelihood principle. Bayesian inference conforms to the likelihood principle whereas classical inference does not. Classical procedures regularly violate the likelihood principle or one or more of the other axioms of good inference. There are no such arguments in favour of classical inference. 117

117 O’Hagan (2010), p. 17–18.

- Gandenberger

- “A new proof of the likelihood principle” 118

- Thesis: Two Principles of Evidence and Their Implications for the Philosophy of Scientific Method (2015)

- gandenberger.org/research

- Do frequentist methods violate the likelihood principle?

- Criticisms:

- Evans 119

- Mayo 120

- Mayo: The law of likelihood and error statistics 121

- Mayo’s response to Hennig and Gandenberger

- Dawid, A.P. (2014). Discussion of “On the Birnbaum Argument for the Strong Likelihood Principle”. 122

- Likelihoodist statistics

Mayo:

Likelihoods are vital to all statistical accounts, but they are often misunderstood because the data are fixed and the hypothesis varies. Likelihoods of hypotheses should not be confused with their probabilities. … [T]he same phenomenon may be perfectly predicted or explained by two rival theories; so both theories are equally likely on the data, even though they cannot both be true. 123

123 Mayo (2019).

3.10.3 Discussion

Lyons:

Particle Physicists tend to favor a frequentist method. This is because we really do consider that our data are representative as samples drawn according to the model we are using (decay time distributions often are exponential; the counts in repeated time intervals do follow a Poisson distribution, etc.), and hence we want to use a statistical approach that allows the data “to speak for themselves,” rather than our analysis being dominated by our assumptions and beliefs, as embodied in Bayesian priors. 124

124 Lyons (2008), p. 891.

- Carnap

- Carnap, R. (1952). The Continuum of Inductive Methods. 125

- Carnap, R. (1960). Logical Foundations of Probability. 126

- Sznajder on the alleged evolution of Carnap’s views of inductive logic 127

- David Cox

- Ian Hacking

- Logic of Statistical Inference 128

- Neyman

- “Frequentist probability and frequentist statistics” 129

- Rozeboom

- Rozeboom, W.W. (1960). The fallacy of the null-hypothesis significance test. 130

- Meehl

- Meehl, P. E. (1978). Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald, and the slow progress of soft psychology. 131

- Zech

- “Comparing statistical data to Monte Carlo simulation” 132

- Richard Royall

- Statistical Evidence: A likelihood paradigm 133

- Jim Berger

- “Could Fisher, Jeffreys, and Neyman have agreed on testing?” 134

- Kendall & Stuart

- “The fiducialist argument rests on the assumption that \(\mathrm{probability}_{2}\) can be converted into \(\mathrm{probability}_{1}\) by means of a pivoting operation.” 135

- Deborah Mayo

- “In defense of the Neyman-Pearson theory of confidence intervals” 136

- Concept of “Learning from error” in Error and the Growth of Experimental Knowledge 137

- “Severe testing as a basic concept in a Neyman-Pearson philosophy of induction” 138

- “Error statistics” 139

- Statistical Inference as Severe Testing 140

- Statistics Wars: Interview with Deborah Mayo - APA blog

- Review of SIST by Prasanta S. Bandyopadhyay

- LSE Research Seminar: Current Controversies in Phil Stat (May 21, 2020)

- Meeting 5 (June 18, 2020)

- Slides: The Statistics Wars and Their Casualties

- Andrew Gelman

- Confirmationist and falsificationist paradigms of science - Sept. 5, 2014

- Beyond subjective and objective in statistics 141

- Retire Statistical Significance: The discussion

- Exchange with Deborah Mayo on abandoning statistical significance

- Several reviews of SIST

- Larry Wasserman

- Kevin Murphy

- Greg Gandenberger

- An introduction to likelihoodist, bayesian, and frequentist methods (1/3)

- As Neyman and Pearson put it in their original presentation of the frequentist approach, “without hoping to know whether each separate hypothesis is true or false, we may search for rules to govern our behavior with regard to them, in the following which we insure that, in the long run of experience, we shall not too often be wrong” (1933, 291).

- An introduction to likelihoodist, bayesian, and frequentist methods (2/3)

- An introduction to likelihoodist, bayesian, and frequentist methods (3/3)

- An argument against likelihoodist methods as genuine alternatives to bayesian and frequentist methods

- “Why I am not a likelihoodist” 144

- Jon Wakefield

- Bayesian and Frequentist Regression Methods 145

- Efron & Hastie

- “Flaws in Frequentist Inference” 146

- Kruschke & Liddel 147

- Steinhardt, J. (2012). Beyond Bayesians and frequentists. 148

- VanderPlas, J. (2014). Frequentism and Bayesianism III: Confidence, credibility, and why frequentism and science do not mix.

- Kent, B. (2021). No, your confidence interval is not a worst-case analysis.

- Aubrey Clayton

- Clayton, A. (2021). Bernoulli’s Fallacy: Statistical Illogic and the Crisis of Modern Science. 149

- Wagenmakers, E.J. (2021). Review: Bernoulli’s Fallacy. 150

125 Carnap (1952).

126 Carnap (1960).

127 Sznajder (2018).

128 Hacking (1965).

129 Neyman (1977).

130 Rozeboom (1960).

131 Meehl (1978).

132 Zech (1995).

133 Royall (1997).

134 Berger (2003).

135 Stuart et al. (2010), p. 460.

136 Mayo (1981).

137 Mayo (1996).

138 Mayo & Spanos (2006).

139 Mayo & Spanos (2011).

140 Mayo (2018).

141 Gelman & Hennig (2017).

142 Murphy (2012), ch. 6.6.

143 Murphy (2022), p. 195–198.

144 Gandenberger (2016).

145 Wakefield (2013), ch. 4.

146 Efron & Hastie (2016), p. 30–36.

147 Kruschke & Liddell (2018).

148 Steinhardt (2012).

149 Clayton (2021).

150 Wagenmakers (2021).

Goodman:

The idea that the \(P\) value can play both of these roles is based on a fallacy: that an event can be viewed simultaneously both from a long-run and a short-run perspective. In the long-run perspective, which is error-based and deductive, we group the observed result together with other outcomes that might have occurred in hypothetical repetitions of the experiment. In the “short run” perspective, which is evidential and inductive, we try to evaluate the meaning of the observed result from a single experiment. If we could combine these perspectives, it would mean that inductive ends (drawing scientific conclusions) could be served with purely deductive methods (objective probability calculations). 151

151 Goodman (1999a). p. 999.

3.11 Replication crisis

3.11.1 Introduction

- Ioannidis, J.P. (2005). Why most published research findings are false. 152

152 Ioannidis (2005).

3.11.2 p-value controversy

- Wasserstein, R.L. & Lazar, N.A. (2016). The ASA’s statement on \(p\)-values: Context, process, and purpose. 153

- Wasserstein, R.L., Allen, L.S., & Lazar, N.A. (2019). Moving to a World Beyond “p<0.05”. 154

- Big names in statistics want to shake up much-maligned P value 155

- Hi-Phi Nation, episode 7

- Fisher:

153 Wasserstein & Lazar (2016).

154 Wasserstein, Allen, & Lazar (2019).

155 Benjamin, D.J. et al. (2017).

[N]o isolated experiment, however significant in itself, can suffice for the experimental demonstration of any natural phenomenon; for the “one chance in a million” will undoubtedly occur, with no less and no more than its appropriate frequency, however surprised we may be that it should occur to us. In order to assert that a natural phenomenon is experimentally demonstrable we need, not an isolated record, but a reliable method of procedure. In relation to the test of significance, we may say that a phenomenon is experimentally demonstrable when we know how to conduct an experiment which will rarely fail to give us a statistically significant result. 156

156 Fisher (1935), p. 13–14.

- Relationship to the LEE

- Tukey, John (1915-2000)

- Wasserman

- Rao & Lovric

- Rao, C.R. & Lovric, M.M. (2016). Testing point null hypothesis of a normal mean and the truth: 21st century perspective. 157

- Mayo

- “Les stats, c’est moi: We take that step here!”

- “Significance tests: Vitiated or vindicated by the replication crisis in psychology?” 158

- At long last! The ASA President’s Task Force Statement on Statistical Significance and Replicability

- Gorard & Gorard. (2016). What to do instead of significance testing. 159

- Vox: What a nerdy debate about p-values shows about science–and how to fix it

- Karen Kafadar: The Year in Review … And More to Come

- The JASA Reproducibility Guide

From “The ASA president’s task force statement on statistical significance and replicability”:

P-values are valid statistical measures that provide convenient conventions for communicating the uncertainty inherent in quantitative results. Indeed, P-values and significance tests are among the most studied and best understood statistical procedures in the statistics literature. They are important tools that have advanced science through their proper application. 160

160 Benjamini, Y. et al. (2021), p. 1.

More:

- Bassily, R., Nissim, K., Smith, A., Steinke, T., Stemmer, U., & Ullman, J. (2015). Algorithmic stability for adaptive data analysis. 161

161 Bassily, R. et al. (2015).

3.12 Classical machine learning

3.12.1 Introduction

- Classification vs regression

- Supervised and unsupervised learning

- Classification = supervised; clustering = unsupervised

- Hastie, Tibshirani, & Friedman 162

- Information Theory, Inference, and Learning 163

- Murphy, K.P. (2012). Machine Learning: A probabilistic perspective. MIT Press. 164

- Murphy, K.P. (2022). Probabilistic Machine Learning: An introduction. MIT Press. 165

- Shalev-Shwarz, S. & Ben-David, S. (2014). Understanding Machine Learning: From Theory to Algorithms. 166

- VC-dimension

3.12.2 History

- History of artificial intelligence

- Arthur Samuel (1901-1990)

- Dartmouth workshop (1956)

- McCarthy, J., Minsky, M.L., Rochester, N., & Shannon, C.E. (1955). A proposal for the Dartmouth Summer Research Project on Artificial Intelligence. 169

- Solomonoff, G. (2016). Ray Solomonoff and the Dartmouth Summer Research Project in Artificial Intelligence, 1956. 170

- Rudolf Carnap (1891-1970)

- Kardum, M. (2020). Rudolf Carnap–The grandfather of artificial neural networks: The influence of Carnap’s philosophy on Walter Pitts. 171

- Bright, L.K. (2022). Carnap’s contributions.

- McCulloch & Pitts

- McCulloch, W. & Pitts, W. (1943). A logical calculus of ideas immanent in nervous activity. 172

- Anderson, J.A. & Rosenfeld, E. (1998). Talking Nets: An oral history of neural networks. 173

- Gefter, A. (2015). The man who tried to redeem the world with logic.

- Perceptron

- Rosenblatt, F. (1961). Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms. 174

- Sompolinsky, H. (2013). Introduction: The Perceptron.

- Connectionist vs symbolic AI

- Minsky, M. & Papert, S. (1969). Perceptrons: An Introduction to Computational Geometry. 175

- AI Winter

- Cartwright, N. (2001). What is wrong with Bayes nets?. 176

169 McCarthy, Minsky, Rochester, & Shannon (1955).

170 Solomonoff (2016).

171 Kardum (2020).

172 McCulloch & Pitts (1943).

173 J. A. Anderson & Rosenfeld (1998).

174 Rosenblatt (1961).

175 Minsky & Papert (1969).

176 Cartwright (2001).

See also:

- Honorific reinterpretation of scientism

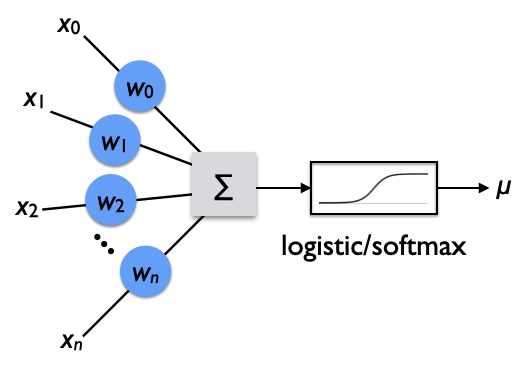

3.12.3 Logistic regression

From a probabilistic point of view, 177 logistic regression can be derived from doing maximum likelihood estimation of a vector of model parameters, \(\vec{w}\), in a dot product with the input features, \(\vec{x}\), and squashed with a logistic function that yields the probability, \(\mu\), of a Bernoulli random variable, \(y \in \{0, 1\}\).

177 Murphy (2012), p. 21.

\[ p(y | \vec{x}, \vec{w}) = \mathrm{Ber}(y | \mu(\vec{x}, \vec{w})) = \mu(\vec{x}, \vec{w})^y \: (1-\mu(\vec{x}, \vec{w}))^{(1-y)} \]

The negative log-likelihood of multiple trials is

\[ \begin{align} \mathrm{NLL} &= - \sum_i \log p(y_i | \vec{x}_i, \vec{w}) \\ &= - \sum_i \log\left( \mu(\vec{x}_i, \vec{w})^{y_i} \: (1-\mu(\vec{x}_i, \vec{w}))^{(1-y_i)} \right) \\ &= - \sum_i \log\left( \mu_i^{y_i} \: (1-\mu_i)^{(1-y_i)} \right) \\ &= - \sum_i \big( y_i \, \log \mu_i + (1-y_i) \log(1-\mu_i) \big) \end{align} \]

which is the cross entropy loss. Note that the first term is non-zero only when the true target is \(y_i=1\), and similarly the second term is non-zero only when \(y_i=0\). 178 Therefore, we can reparametrize the target \(y_i\) in favor of \(t_{ki}\) that is one-hot in an index \(k\) over classes.

178 Note: Label smoothing is a regularization technique that smears the activation over other labels, but we don’t do that here.

\[ \mathrm{CEL} = \mathrm{NLL} = - \sum_i \sum_k \big( t_{ki} \, \log \mu_{ki} \big) \]

where

\[ t_{ki} = \begin{cases} 1 & \mathrm{if}\ (k = y_i = 0)\ \mathrm{or}\ (k = y_i = 1) \\ 0 & \mathrm{otherwise} \end{cases} \]

and

\[ \mu_{ki} = \begin{cases} 1-\mu_i & \mathrm{if}\ k = 0 \\ \mu_i & \mathrm{if}\ k =1 \end{cases} \]

This readily generalizes from binary classification to classification over many classes as we will discuss more below. Note that in the sum over classes, \(k\), only one term for the true class contributes.

\[ \mathrm{CEL} = - \left. \sum_i \log \mu_{ki} \right|_{k\ \mathrm{is\ such\ that}\ y_k=1} \tag{3.3}\]

Logistic regression uses the logit function 179, which is the logarithm of the odds—the ratio of the chance of success to failure. Let \(\mu\) be the probability of success in a Bernoulli trial, then the logit function is defined as

179 “Logit” was coined by Joseph Berkson (1899-1982).

\[ \mathrm{logit}(\mu) \equiv \log\left(\frac{\mu}{1-\mu}\right) \]

Logistic regression assumes that the logit function is a linear function of the explanatory variable, \(x\).

\[ \log\left(\frac{\mu}{1-\mu}\right) = \beta_0 + \beta_1 x \]

where \(\beta_0\) and \(\beta_1\) are trainable parameters. (TODO: Why would we assume this?) This can be generalized to a vector of multiple input variables, \(\vec{x}\), where the input vector has a 1 prepended to be its zeroth component in order to conveniently include the bias, \(\beta_0\), in a dot product.

\[ \vec{x} = (1, x_1, x_2, \ldots, x_n)^\intercal \]

\[ \vec{w} = (\beta_0, \beta_1, \beta_2, \ldots, \beta_n)^\intercal \]

\[ \log\left(\frac{\mu}{1-\mu}\right) = \vec{w}^\intercal \vec{x} \]

For the moment, let \(z \equiv \vec{w}^\intercal \vec{x}\). Exponentiating and solving for \(\mu\) gives

\[ \mu = \frac{ e^z }{ 1 + e^z } = \frac{ 1 }{ 1 + e^{-z} } \]

This function is called the logistic or sigmoid function.

\[ \mathrm{logistic}(z) \equiv \mathrm{sigm}(z) \equiv \frac{ 1 }{ 1 + e^{-z} } \]

Since we inverted the logit function by solving for \(\mu\), the inverse of the logit function is the logistic or sigmoid.

\[ \mathrm{logit}^{-1}(z) = \mathrm{logistic}(z) = \mathrm{sigm}(z) \]

And therefore,

\[ \mu = \mathrm{sigm}(z) = \mathrm{sigm}(\vec{w}^\intercal \vec{x}) \]

See also:

- Logistic regression

- Harlan, W.S. (2007). Bounded geometric growth: motivation for the logistic function.

- Heesch, D. A short intro to logistic regression.

- Roelants, P. (2019). Logistic classification with cross-entropy.

3.12.4 Softmax regression

Again, from a probabilistic point of view, we can derive the use of multi-class cross entropy loss by starting with the Bernoulli distribution, generalizing it to multiple classes (indexed by \(k\)) as

\[ p(y_k | \mu) = \mathrm{Cat}(y_k | \mu_k) = \prod_k {\mu_k}^{y_k} \]

which is the categorical or multinoulli distribution. The negative-log likelihood of multiple independent trials is

\[ \mathrm{NLL} = - \sum_i \log \left(\prod_k {\mu_{ki}}^{y_{ki}}\right) = - \sum_i \sum_k y_{ki} \: \log \mu_{ki} \]

Noting again that \(y_{ki} = 1\) only when \(k\) is the true class, and is 0 otherwise, this simplifies to eq. 3.3.

See also:

- Multinomial logistic regression

- McFadden 180

- Softmax is really a soft argmax. TODO: find ref.

- Softmax is not unique. There are other squashing functions. 181

- Roelants, P. (2019). Softmax classification with cross-entropy.

- Gradients from backprop through a softmax

- Goodfellow et al. point out that any negative log-likelihood is a cross entropy between the training data and the probability distribution predicted by the model. 182

3.12.5 Decision trees

- Freund, Y. & Schapire, R.E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. (AdaBoost) 183

- Chen, T. & Guestrin, C. (2016). Xgboost: A scalable tree boosting system. 184

- Aytekin, C. (2022). Neural networks are decision trees. 185

- Grinsztajn, L., Oyallon, E., & Varoquaux, G. (2022). Why do tree-based models still outperform deep learning on tabular data? 186

- Coadou, Y. (2022). Boosted decision trees. 187

3.12.6 Clustering

- unsupervised

- Gaussian Mixture Models (GMMs)

- Gaussian discriminant analysis

- \(\chi^2\)

- Generalized Linear Models (GLMs)

- Exponential family of PDFs

- Multinoulli \(\mathrm{Cat}(x|\mu)\)

- GLMs

- EM algorithm

- \(k\)-means

- Discussion

- Hartigan, J.A. (1985). Statistical theory in clustering. 188

- Clustering high-dimensional data

- t-distributed stochastic neighbor embedding (t-SNE)

- Slonim, N., Atwal, G.S., Tkacik, G. & Bialek, W. (2005). Information-based clustering. 189

- Topological data analysis

- Dindin, M. (2018). TDA To Rule Them All: ToMATo Clustering.

- Relationship of clustering and autoencoding

- Olah, C. (2014). Neural networks, manifolds, and topology.

- Batson et al. (2021). Topological obstructions to autoencoding. 190

- “What are the true clusters?” 191

- Lauc, D. (2020). Machine learning and the philosophical problems of induction. 192

- Ronen, M., Finder, S.E., & Freifeld, O. (2022). DeepDPM: Deep clustering with an unknown number of clusters. 193

- Fang, Z. et al. (2022). Is out-of-distribution detection learnable?. 194

188 Hartigan (1985).

189 Slonim, Atwal, Tkacik, & Bialek (2005).

190 Batson, Haaf, Kahn, & Roberts (2021).

191 Hennig (2015).

192 Lauc (2020), p. 103–4.

193 Ronen, Finder, & Freifeld (2022).

194 Fang, Z. et al. (2022).

See also:

3.13 Deep learning

3.13.1 Introduction

- Early contributions

- Gardner, M.W. & Dorling, S.R. (1998). Artificial neural networks (the multilayer perceptron)–a review of applications in the atmospheric sciences. 195

- Schmidhuber, J. (2024). A Nobel prize for plagiarism. 196

- Conceptual reviews of deep learning

- Lower to higher level representations 197

- LeCun, Y., Bengio, Y., & Hinton, G. (2015). Review: Deep learning. 198

- Sutskever, I. (2015). A brief overview of deep learning. 199

- Deep Learning 200

- Kaplan, J. (2019). Notes on contemporary machine learning. 201

- Raissi, M. (2017). Deep learning tutorial.

- Arora, S. (2023). Theory of Deep Learning.

- Backpropagation

- Rumelhart, D.E., Hinton, G.E., & Williams, R.J. (1986). Learning representations by back-propagating errors. 202

- Amari, S. (1993). Backpropagation and stochastic gradient descent method. 203

- Schmidhuber’s Critique of Honda Prize for Dr. Hinton.

- Schmidhuber: Who invented backpropagation?

- Scmidhuber: The most cited neural networks all build on work done in my labs.

- LeCun, Y. & Bottou, L. (1998). Efficient BackProp. 204

- Pedagogy

- Bekman, S. (2023). Machine Learning Engineering Open Book.

- Labonne, M. (2023). Large Language Model Course.

- Microsoft. (2023). Generative AI for Beginners.

- Scardapane, S. (2024). Alice’s Adventures in a Differentiable Wonderland, Vol. I: A Tour of the Land. 205

- Grover, A. (2018). Notes on deep generative models.

- Practical guides

- Bottou, L. (1998). Stochastic gradient descent tricks. 206

- Bengio, Y. (2012). Practical recommendations for gradient-based training of deep architectures.

- Hao, L. et al. (2017). Visualizing the loss landscape of neural nets.

- Discussion

- Norvig, P. (2011). On Chomsky and the Two Cultures of Statistical Learning. 207

- Sutton, R. (2019). The bitter lesson. 208

- Frankle, J. & Carbin, M. (2018). The lottery ticket hypothesis: Finding sparse, trainable neural networks. 209

- AIMyths.com

195 Gardner & Dorling (1998).

196 Schmidhuber (2024).

197 Bengio (2009).

198 LeCun, Bengio, & Hinton (2015).

199 Sutskever (2015).

200 Goodfellow et al. (2016).

201 Kaplan, J. et al. (2019).

202 Rumelhart, Hinton, & Williams (1986).

203 Amari (1993).

204 LeCun & Bottou (1998).

205 Scardapane (2024).

206 Bottou (1998).

207 Norvig (2011).

208 Sutton (2019).

209 Frankle & Carbin (2018).

3.13.2 Gradient descent

\[ \hat{f} = \underset{f \in \mathcal{F}}{\mathrm{argmin}} \underset{x \sim \mathcal{X}}{\mathbb{E}} L(f, x) \]

The workhorse algorithm for optimizing (training) model parameters is gradient descent:

\[ \vec{w}[t+1] = \vec{w}[t] - \eta \frac{\partial L}{\partial \vec{w}}[t] \]

In Stochastic Gradient Descent (SGD), you chunk the training data into minibatches (AKA batches), \(\vec{x}_{bt}\), and take a gradient descent step with each minibatch:

\[ \vec{w}[t+1] = \vec{w}[t] - \frac{\eta}{m} \sum_{i=1}^m \frac{\partial L}{\partial \vec{w}}[\vec{x}_{bt}] \]

where

- \(t \in \mathbf{N}\) is the learning step number

- \(\eta\) is the learning rate

- \(m\) is the number of samples in a minibatch, called the batch size

- \(L\) is the loss function

- \(\frac{\partial L}{\partial \vec{w}}\) is the gradient

3.13.3 Deep double descent

- Bias and variance trade-off. See Bias and variance.

- MSE vs model capacity

Papers:

- Belkin, M., Hsu, D., Ma, S., & Mandal, S. (2019). Reconciling modern machine-learning practice and the classical bias-variance trade-off. 211

- Muthukumar, V., Vodrahalli, K., Subramanian, V., & Sahai, A. (2019). Harmless interpolation of noisy data in regression. 212

- Nakkiran, P., Kaplun, G., Bansal, Y., Yang, T., Barak, B., & Sutskever, I. (2019). Deep double descent: Where bigger models and more data hurt. 213

- Chang, X., Li, Y., Oymak, S., & Thrampoulidis, C. (2020). Provable benefits of overparameterization in model compression: From double descent to pruning neural networks. 214

- Holzmüller, D. (2020). On the universality of the double descent peak in ridgeless regression. 215

- Dar, Y., Muthukumar, V., & Baraniuk, R.G. (2021). A farewell to the bias-variance tradeoff? An overview of the theory of overparameterized machine learning. 216

- Balestriero, R., Pesenti, J., & LeCun, Y. (2021). Learning in high dimension always amounts to extrapolation. 217

- Belkin, M. (2021). Fit without fear: remarkable mathematical phenomena of deep learning through the prism of interpolation. 218

- Nagarajan, V. (2021). Explaining generalization in deep learning: progress and fundamental limits. 219

- Bach, F. (2022). Learning Theory from First Principles. 220

- Barak, B. (2022). The uneasy relationship between deep learning and (classical) statistics.

- Ghosh, N. & Belkin, M. (2022). A universal trade-off between the model size, test loss, and training loss of linear predictors. 221

- Singh, S.P., Lucchi, A., Hofmann, T., & Schölkopf, B. (2022). Phenomenology of double descent in finite-width neural networks. 222

- Hastie, T., Montanari, A., Rosset, S., & Tibshirani, R. J. (2022). Surprises in high-dimensional ridgeless least squares interpolation. 223

- Bubeck, S. & Sellke, M. (2023). A universal law of robustness via isoperimetry. 224

- Gamba, M., Englesson, E., Björkman, M., & Azizpour, H. (2022). Deep double descent via smooth interpolation. 225

- Schaeffer, R. et al. (2023). Double descent demystified: Identifying, interpreting & ablating the sources of a deep learning puzzle. 226

- Yang, T. & Suzuki, J. (2023). Dropout drops double descent. 227

- Maddox, W.J., Benton, G., & Wilson, A.G. (2023). Rethinking parameter counting in deep models: Effective dimensionality revisited. 228

211 Belkin, Hsu, Ma, & Mandal (2019).

212 Muthukumar, Vodrahalli, Subramanian, & Sahai (2019).

213 Nakkiran, P. et al. (2019).

214 Chang, Li, Oymak, & Thrampoulidis (2020).

215 Holzmüller (2020).

216 Dar, Muthukumar, & Baraniuk (2021).

217 Balestriero, Pesenti, & LeCun (2021).

218 Belkin (2021).

219 Nagarajan (2021).

220 Bach (2022), p. 225–230.

221 Ghosh & Belkin (2022).

222 Singh, Lucchi, Hofmann, & Schölkopf (2022).

223 Hastie, Montanari, Rosset, & Tibshirani (2022).

224 Bubeck & Sellke (2023).

225 Gamba, Englesson, Björkman, & Azizpour (2022).

226 Schaeffer, R. et al. (2023).

227 Yang & Suzuki (2023).

228 Maddox, Benton, & Wilson (2023).

Blogs:

- Hubinger, E. (2019). Understanding deep double descent. LessWrong.

- OpenAI. (2019). Deep double descent.

- Steinhardt, J. (2022). More is different for AI. 229

- Henighan, T. et al. (2023). Superposition, memorization, and double descent. 230

Twitter threads:

- Daniela Witten. (2020). Twitter thread: The bias-variance trade-off & double descent.

- François Fleuret. (2020). Twitter thread: The double descent with polynomial regression.

- adad8m. (2022). Twitter thread: The double descent with polynomial regression.

- Peyman Milanfar. (2022). Twitter thread: The perpetually undervalued least-squares.

- Pierre Ablin. (2023). Twitter thread: The double descent with polynomial regression.

3.13.4 Regularization

Regularization = any change we make to the training algorithm in order to reduce the generalization error but not the training error. 231

231 Mishra, D. (2020). Weight Decay == L2 Regularization?

Most common regularizations:

- L2 Regularization

- L1 Regularization

- Data Augmentation

- Dropout

- Early Stopping

Papers:

- Loshchilov, I. & Hutter, F. (2019). Decoupled weight decay regularization.

- Chen, S., Dobriban, E., & Lee, J. H. (2020). A group theoretic framework for data augmentation. 232

232 S. Chen, Dobriban, & Lee (2020).

3.13.5 Batch size vs learning rate

Papers:

- Keskar, N.S. et al. (2016). On large-batch training for deep learning: Generalization gap and sharp minima.

[L]arge-batch methods tend to converge to sharp minimizers of the training and testing functions—and as is well known—sharp minima lead to poorer generalization. In contrast, small-batch methods consistently converge to flat minimizers, and our experiments support a commonly held view that this is due to the inherent noise in the gradient estimation.

Hoffer, E. et al. (2017). Train longer, generalize better: closing the generalization gap in large batch training of neural networks.

- \(\eta \propto \sqrt{m}\)

Goyal, P. et al. (2017). Accurate large minibatch SGD: Training ImageNet in 1 hour.

- \(\eta \propto m\)

You, Y. et al. (2017). Large batch training of convolutional networks.

- Layer-wise Adaptive Rate Scaling (LARS)

You, Y. et al. (2017). ImageNet training in minutes.

- Layer-wise Adaptive Rate Scaling (LARS)

Jastrzebski, S. (2018). Three factors influencing minima in SGD.

- \(\eta \propto m\)

Smith, S.L. & Le, Q.V. (2018). A Bayesian Perspective on Generalization and Stochastic Gradient Descent.

Smith, S.L. et al. (2018). Don’t decay the learning rate, increase the batch size.

- \(m \propto \eta\)

Masters, D. & Luschi, C. (2018). Revisiting small batch training for deep neural networks.

This linear scaling rule has been widely adopted, e.g., in Krizhevsky (2014), Chen et al. (2016), Bottou et al. (2016), Smith et al. (2017) and Jastrzebski et al. (2017).

On the other hand, as shown in Hoffer et al. (2017), when \(m \ll M\), the covariance matrix of the weight update \(\mathrm{Cov(\eta \Delta\theta)}\) scales linearly with the quantity \(\eta^2/m\).

This implies that, adopting the linear scaling rule, an increase in the batch size would also result in a linear increase in the covariance matrix of the weight update \(\eta \Delta\theta\). Conversely, to keep the scaling of the covariance of the weight update vector \(\eta \Delta\theta\) constant would require scaling \(\eta\) with the square root of the batch size \(m\) (Krizhevsky, 2014; Hoffer et al., 2017).

Lin, T. et al. (2020). Don’t use large mini-batches, use local SGD.

- Post-local SGD.Golmant, N. et al. (2018). On the computational inefficiency of large batch sizes for stochastic gradient descent.

Scaling the learning rate as \(\eta \propto \sqrt{m}\) attempts to keep the weight increment length statistics constant, but the distance between SGD iterates is governed more by properties of the objective function than the ratio of learning rate to batch size. This rule has also been found to be empirically sub-optimal in various problem domains. … There does not seem to be a simple training heuristic to improve large batch performance in general.

- McCandlish, S. et al. (2018). An empirical model of large-batch training.

- Critical batch size

- Shallue, C.J. et al. (2018). Measuring the effects of data parallelism on neural network training.

In all cases, as the batch size grows, there is an initial period of perfect scaling (\(b\)-fold benefit, indicated with a dashed line on the plots) where the steps needed to achieve the error goal halves for each doubling of the batch size. However, for all problems, this is followed by a region of diminishing returns that eventually leads to a regime of maximal data parallelism where additional parallelism provides no benefit whatsoever.

- Jastrzebski, S. et al. (2018). Width of minima reached by stochastic gradient descent is influenced by learning rate to batch size ratio.

- \(\eta \propto m\)

We show this experimentally in Fig. 5, where similar learning dynamics and final performance can be observed when simultaneously multiplying the learning rate and batch size by a factor up to a certain limit.

- You, Y. et al. (2019). Large-batch training for LSTM and beyond.

- Warmup and use \(\eta \propto m\)

[W]e propose linear-epoch gradual-warmup approach in this paper. We call this approach Leg-Warmup (LEGW). LEGW enables a Sqrt Scaling scheme in practice and as a result we achieve much better performance than the previous Linear Scaling learning rate scheme. For the GNMT application (Seq2Seq) with LSTM, we are able to scale the batch size by a factor of 16 without losing accuracy and without tuning the hyper-parameters mentioned above.

- You, Y. et al. (2019). Large batch optimization for deep learning: Training BERT in 76 minutes.

- LARS and LAMB

- Zhang, G. et al. (2019). Which algorithmic choices matter at which batch sizes? Insights from a Noisy Quadratic Model.

Consistent with the empirical results of Shallue et al. (2018), each optimizer shows two distinct regimes: a small-batch (stochastic) regime with perfect linear scaling, and a large-batch (deterministic) regime insensitive to batch size. We call the phase transition between these regimes the critical batch size.

- Li, Y., Wei, C., & Ma, T. (2019). Towards explaining the regularization effect of initial large learning rate in training neural networks.

Our analysis reveals that more SGD noise, or larger learning rate, biases the model towards learning “generalizing” kernels rather than “memorizing” kernels.

Kaplan, J. et al. (2020). Scaling laws for neural language models.

Jastrzebski, S. et al. (2020). The break-even point on optimization trajectories of deep neural networks.

Blogs:

- Shen, K. (2018). Effect of batch size on training dynamics.

- Chang, D. (2020). Effect of batch size on neural net training.

3.13.6 Normalization

- BatchNorm

- LayerNorm, GroupNorm

- Online Normalization: Chiley, V. et al. (2019). Online normalization for training neural networks. 233

- Kiani, B., Balestriero, R., LeCun, Y., & Lloyd, S. (2022). projUNN: efficient method for training deep networks with unitary matrices. 234

- Huang, L. et al. (2020). Normalization techniques in training DNNs: Methodology, analysis and application. 235

3.13.7 Finetuning

- Hu, E.J. et al (2021). LoRA: Low-rank adaptation of large language models. 236

- Dettmers, T., Pagnoni, A., Holtzman, A., & Zettlemoyer, L. (2023). QLoRA: Efficient finetuning of quantized LLMs. 237

- Zhao, J. et al (2024). GaLore: Memory-efficient LLM training by gradient low-rank projection. 238

- Huh, M. et al. (2024). Training neural networks from scratch with parallel low-rank adapters. 239

3.13.8 Computer vision

- Computer Vision (CV)

- Fukushima: neocognitron 240

- LeNet-5

- LeCun, Y. (1989). Generalization and network design strategies.

- LeCun: OCR with backpropagation 241

- LeCun: LeNet-5 242

- Ciresan: MCDNN 243

- Krizhevsky, Sutskever, and Hinton: AlexNet 244

- VGG 245

- ResNet 246

- ResNet is performing a forward Euler discretisation of the ODE: \(\dot{x} = \sigma(F(x))\). 247

- MobileNet 248

- Neural ODEs 249

- EfficientNet 250

- VisionTransformer 251

- EfficientNetV2 252

- gMLP 253

- Dhariwal, P. & Nichol, A. (2021). Diffusion models beat GANs on image synthesis. 254

- Liu, Y. et al. (2021). A survey of visual transformers. 255

- Ingrosso, A. & Goldt, S. (2022). Data-driven emergence of convolutional structure in neural networks. 256

- Park, N. & Kim, S. (2022). How do vision transformers work? 257

- Zhao, Y. et al. (2023). DETRs beat YOLOs on real-time object detection. 258

- Nakkiran, P., Bradley, A., Zhou, H. & Advani, M. (2024). Step-by-step diffusion: An elementary tutorial. 259

240 Fukushima & Miyake (1982).

241 LeCun, Y. et al. (1989).

242 LeCun, Bottou, Bengio, & Haffner (1998).

243 Ciresan, Meier, Masci, & Schmidhuber (2012).

244 Krizhevsky, Sutskever, & Hinton (2012).

245 Simonyan & Zisserman (2014).

246 He, Zhang, Ren, & Sun (2015).

248 Howard, A.G. et al. (2017).

249 R. T. Q. Chen, Rubanova, Bettencourt, & Duvenaud (2018).

250 Tan & Le (2019).

251 Dosovitskiy, A. et al. (2020).

252 Tan & Le (2021).

253 H. Liu, Dai, So, & Le (2021).

254 Dhariwal & Nichol (2021).

255 Liu, Y. et al. (2021).

256 Ingrosso & Goldt (2022).

257 Park & Kim (2022).

258 Zhao, Y. et al. (2023).

259 Nakkiran, Bradley, Zhou, & Advani (2024).

Resources:

- Neptune.ai. (2021). Object detection algorithms and libraries.

- facebookresearch/vissl

- PyTorch Geometric (PyG)

3.13.9 Natural language processing

3.13.9.1 Introduction

- Natural Language Processing (NLP)

- History

- Firth, J.R. (1957): “You shall know a word by the company it keeps.” 260

- Nirenburg, S. (1996). Bar Hillel and Machine Translation: Then and Now. 261

- Hutchins, J. (2000). Yehoshua Bar-Hillel: A philosophers’ contribution to machine translation. 262

- Textbooks

- Jurafsky, D. & Martin, J.H. (2022). Speech and Language Processing: An introduction to natural language processing, computational linguistics, and speech recognition. 263

- Liu, Z., Lin, Y., & Sun, M. (2023). Representation Learning for Natural Language Processing. 264

3.13.9.2 word2vec

- Mikolov 265

- Julia Bazińska

- Olah, C. (2014). Deep learning, NLP, and representations.

- Alammar, J. (2019). The illustrated word2vec.

- Migdal, P. (2017). king - man + woman is queen; but why?

- Kun, J. (2018). A Programmer’s Introduction to Mathematics.

- Word vectors have semantic linear structure 266

- Ethayarajh, K. (2019). Word embedding analogies: Understanding King - Man + Woman = Queen.

- Allen, C. (2019). “Analogies Explained” … Explained.

3.13.9.3 RNNs

- RNNs and LSTMs

- Hochreiter, S. & Schmidhuber, J. (1997). Long short-term memory. 267

- Graves, A. (2013). Generating sequences with recurrent neural networks. 268

- Auto-regressive language modeling

- Olah, C. (2015). Understanding LSTM networks.

- Karpathy, A. (2015). The unreasonable effectiveness of recurrent neural networks.

Chain rule of language modeling (chain rule of probability):

\[ P(x_1, \ldots, x_T) = P(x_1, \ldots, x_{n-1}) \prod_{t=n}^{T} P(x_t | x_1 \ldots x_{t-1}) \]

or for the whole sequence:

\[ P(x_1, \ldots, x_T) = \prod_{t=1}^{T} P(x_t | x_1 \ldots x_{t-1}) \]

\[ = P(x_1) \: P(x_2 | x_1) \: P(x_3 | x_1 x_2) \: P(x_4 | x_1 x_2 x_3) \ldots \]

A language model (LM), predicts the next token given previous context. The output of the model is a vector of logits, which is given to a softmax to convert to probabilities for the next token.

\[ P(x_t | x_1 \ldots x_{t-1}) = \mathrm{softmax}\left( \mathrm{model}(x_1 \ldots x_{t-1}) \right) \]

Auto-regressive inference follows this chain rule. If done with greedy search:

\[ \hat{x}_t = \underset{x_t \in V}{\mathrm{argmax}} \: P(x_t | x_1 \ldots x_{t-1}) \]

Beam search:

- Beam search as used in NLP is described in Sutskever. 269

- Zhang, W. (1998). Complete anytime beam search. 270

- Zhou, R. & Hansen, E. A. (2005). Beam-stack search: Integrating backtracking with beam search. 271

- Collobert, R., Hannun, A., & Synnaeve, G. (2019). A fully differentiable beam search decoder. 272

269 Sutskever, Vinyals, & Le (2014), p. 4.

270 Zhang (1998).

271 Zhou & Hansen (2005).

272 Collobert, Hannun, & Synnaeve (2019).

Backpropagation through time (BPTT):

- Werbos, P.J. (1990). Backpropagation through time: what it does and how to do it. 273

273 Werbos (1990).

Neural Machine Translation (NMT):

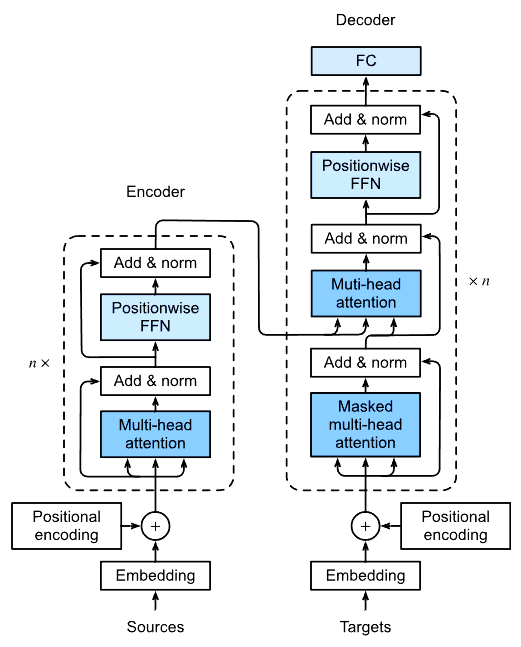

3.13.9.4 Transformers

\[ \mathrm{attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q\, K^\intercal}{\sqrt{d_k}}\right) V \]

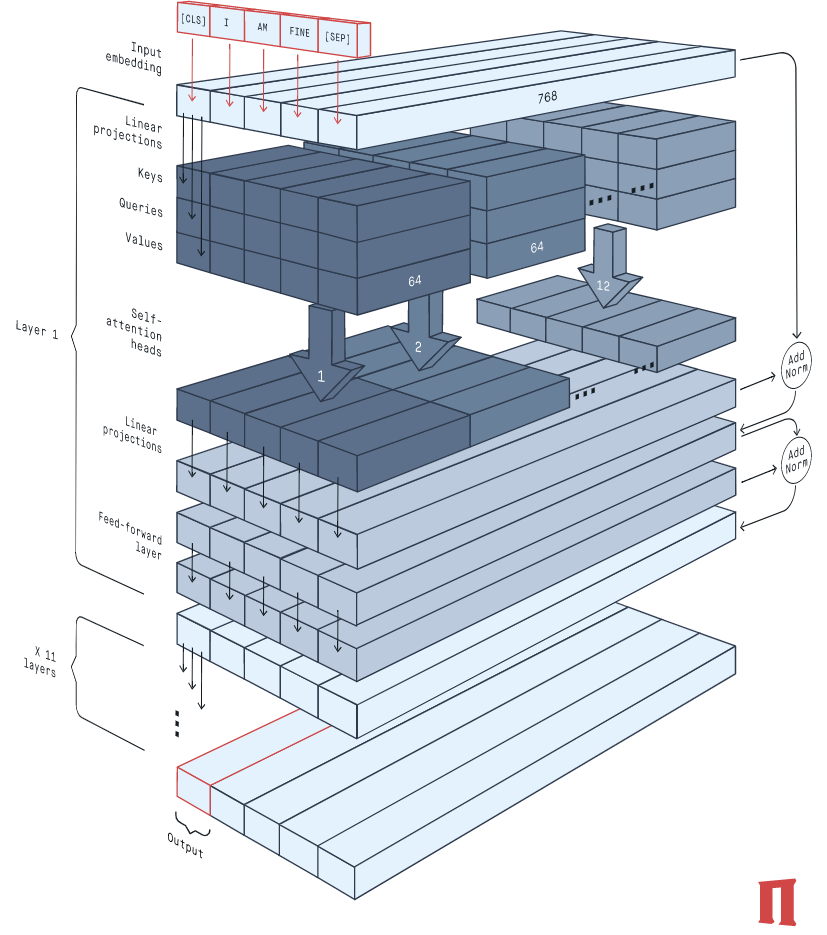

3.13.9.4.1 Attention and Transformers

- Transformer 278

- BERT 279

- Alammar, J. (2018). The illustrated BERT.

- Horev, R. (2018). BERT Explained: State of the art language model for NLP.

- Liu, Y. et al. (2019). RoBERTa: A robustly optimized BERT pretraining approach. 280

- Raffel, C. et al. (2019). Exploring the limits of transfer learning with a unified text-to-text transformer. (T5 model) 281

- Horan, C. (2021). 10 things you need to know about BERT and the transformer architecture that are reshaping the AI landscape.

- Video: What are transformer neural networks?

- Video: How to get meaning from text with language model BERT.

- ALBERT 282

- BART 283

- GPT-1 284, 2 285, 3 286

- Alammar, J. (2019). The illustrated GPT-2.

- Yang, Z. et al. (2019). XLNet: Generalized autoregressive pretraining for language understanding. 287

- Daily Nous: Philosophers On GPT-3.

- DeepMind’s blog posts for more details: AlphaFold1, AlphaFold2 (2020). Slides from the CASP14 conference are publicly available here.

- Joshi, C. (2020). Transformers are GNNs.

- Lakshmanamoorthy, R. (2020). A complete learning path to transformers (with guide to 23 architectures).

- Zaheer, M. et al. (2020). Big Bird: Transformers for longer sequences. 288

- Edelman, B.L., Goel, S., Kakade, S., & Zhang, C. (2021). Inductive biases and variable creation in self-attention mechanisms. 289

- Tay, Y., Dehghani, M., Bahri, D., & Metzler, D. (2022). Efficient transformers: A survey. 290

- Phuong, M. & Hutter, M. (2022). Formal algorithms for transformers. 291

- Chowdhery, A. et al. (2022). PaLM: Scaling language modeling with pathways. 292

- Ouyang, L. et al. (2022). Training language models to follow instructions with human feedback. (InstructGPT) 293

- OpenAI. (2022). Blog: ChatGPT: Optimizing Language Models for Dialogue.

- Wolfram, S. (2023). What is ChatGPT doing—and why does it work? 294

- GPT-4 295

- Mohamadi, S., Mujtaba, G., Le, N., Doretto, G., & Adjeroh, D.A. (2023). ChatGPT in the age of generative AI and large language models: A concise survey. 296

- Zhao, W.X. et al. (2023). A survey of large language models. 297

- 3Blue1Brown. (2024). Video: But what is a GPT? Visual intro to Transformers.

- Golovneva, O., Wang, T., Weston, J., & Sukhbaatar, S. (2024). Contextual position encoding: Learning to count what’s important. 298

- Apple. (2024). Apple Intelligence Foundation Language Models.

278 Vaswani, A. et al. (2017).

279 Devlin, Chang, Lee, & Toutanova (2018).

280 Liu, Y. et al. (2019).

281 Raffel, C. et al. (2019).

282 Lan, Z. et al. (2019).

283 Lewis, M. et al. (2019).

284 Radford, Narasimhan, Salimans, & Sutskever (2018).

285 Radford, A. et al. (2019).

286 Brown, T.B. et al. (2020).

287 Yang, Z. et al. (2019).

288 Zaheer, M. et al. (2020).

289 Edelman, Goel, Kakade, & Zhang (2021).

290 Tay, Dehghani, Bahri, & Metzler (2022).

291 Phuong & Hutter (2022).

292 Chowdhery, A. et al. (2022).

293 Ouyang, L. et al. (2022).

294 Wolfram (2023).

295 OpenAI (2023).

296 Mohamadi, S. et al. (2023).

297 Zhao, W.X. et al. (2023).

298 Golovneva, Wang, Weston, & Sukhbaatar (2024).

3.13.9.4.2 Computational complexity of transformers

- kipply (2022). Transformer inference arithmetic.

- Bahdanau, D. (2022). The FLOPs calculus of language model training.

- Sanger, A. (2023). Inference characteristics of Llama-2.

- Shenoy, V. & Kiely, P. (2023). A guide to LLM inference and performance.

- Anthony, Q., Biderman, S., & Schoelkopf, H. (2023). Transformer math 101.

3.13.9.4.3 Efficient transformers

- Dao, T., Fu, D.Y., Ermon, S., Rudra, A., & Ré, C. (2022). FlashAttention: Fast and memory-efficient exact attention with IO-awareness. 299

- Pope, R. et al. (2022). Efficiently scaling transformer inference.

- Dao, T. (2023). FlashAttention-2: Faster attention with better parallelism and work partitioning.

- Kim, S. et al. (2023). Full stack optimization of transformer inference: A survey.

- PyTorch. (2023). Accelerating generative AI with PyTorch II: GPT, Fast.

- Nvidia. (2023). Mastering LLM techniques: Inference optimization.

- Weng, L. (2023). Large transformer model inference optimization.

- Kwon, W. et al. (2023). Efficient memory management for large language model serving with PagedAttention.

- Zhang, L. (2023). Dissecting the runtime performance of the training, fine-tuning, and inference of large language models.

- Dettmers, T. (2023). Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning.

- Fu, Y. (2023). Towards 100x speedup: Full stack transformer inference optimization.

- Fu, Y. (2024). Challenges in deploying long-context transformers: A theoretical peak performance analysis.

- Fu, Y. et al. (2024). Data engineering for scaling language models to 128K context.

- Kwon, W. et al. (2023). Efficient memory management for large language model serving with PagedAttention. (vLLM)

- Shah, J. et al. (2024). FlashAttention-3: Fast and accurate attention with asynchrony and low-precision.

299 Dao, T. et al. (2022).

3.13.9.4.4 What comes after Transformers?

- Gu, A., Goel, K., & Ré, C. (2021). Efficiently modeling long sequences with structured state spaces. 300

- Merrill, W. & Sabharwal, A. (2022). The parallelism tradeoff: Limitations of log-precision transformers. 301

- Bulatov, A., Kuratov, Y., & Burtsev, M.S. (2022). Recurrent memory transformer. 302

- Raffel, C. (2023). A new alchemy: Language model development as a subfield?.

- Bulatov, A., Kuratov, Y., & Burtsev, M.S. (2023). Scaling transformer to 1M tokens and beyond with RMT. 303

- Bertsch, A., Alon, U., Neubig, G., & Gormley, M.R. (2023). Unlimiformer: Long-range transformers with unlimited length input. 304

- Mialon, G. et al. (2023). Augmented Language Models: a Survey. 305

- Peng, B. et al. (2023). RWKV: Reinventing RNNs for the Transformer Era. 306

- Sun, Y. et al. (2023). Retentive network: A successor to transformer for large language models. 307

- Gu, A. & Dao, T. (2023). Mamba: Linear-time sequence modeling with selective state spaces. 308

- Wang, H. et al. (2023). BitNet: Scaling 1-bit transformers for large language models. 309

- Ma, S. et al. (2024). The era of 1-bit LLMs: All large language models are in 1.58 bits. 310

- Ma, X. et al. (2024). Megalodon: Efficient LLM pretraining and inference with unlimited context length. 311

- Bhargava, A., Witkowski, C., Shah, M., & Thomson, M. (2023). What’s the magic word? A control theory of LLM prompting. 312

- Sun, Y. et al. (2024). Learning to (learn at test time): RNNs with expressive hidden states.

- Dao, T. & Gu, A. (2024). Transformers are SSMs: Generalized models and efficient algorithms through structured state space duality. 313

- Banerjee, S., Agarwal, A., & Singla, S. (2024). LLMs will always hallucinate, and we need to live with this. 314

- Jingyu Liu et al. (2025). TiDAR: Think in diffusion, talk in autoregression. 315

300 Gu, Goel, & Ré (2021).

301 Merrill & Sabharwal (2022).

302 Bulatov, Kuratov, & Burtsev (2022).

303 Bulatov, Kuratov, & Burtsev (2023).

304 Bertsch, Alon, Neubig, & Gormley (2023).

305 Mialon, G. et al. (2023).

306 Peng, B. et al. (2023).

307 Sun, Y. et al. (2023).

308 Gu & Dao (2023).

309 Wang, H. et al. (2023).

310 Ma, S. et al. (2024).

311 Ma, X. et al. (2024).

312 Bhargava, Witkowski, Shah, & Thomson (2023).

313 Dao & Gu (2024).

314 Banerjee, Agarwal, & Singla (2024).

315 Jingyu Liu et al (2025).

3.13.9.5 Evaluation methods

- Hendrycks, D. et al. (2020). Measuring Massive Multitask Language Understanding. (MMLU)

- Yue, X. et al. (2023). MMMU: A Massive Multi-discipline Multimodal Understanding and reasoning benchmark for expert AGI.

- Kim, J. et al. (2024). Evalverse: Unified and accessible library for large language model evaluation.

- Biderman, S. (2024). Lessons from the trenches on reproducible evaluation of language models.

- Stanford’s HELM Leaderboards

- Liang, P. et al. (2022). Holistic Evaluation of Language Models (HELM)

- Lee, T. et al. (2023). Holistic Evaluation of Text-To-Image Models (HEIM)

- github.com/stanford-crfm/helm

- EleutherAI/lm-evaluation-harness

- Srivastava, A. (2022). Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models.

- Suzgun, M. (2022). Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them.

- Wang, Y. (2024). MMLU-Pro: A More Robust and Challenging Multi-Task Language Understanding Benchmark.

3.13.9.6 Scaling laws in NLP

- Hestness, J. et al. (2017). Deep learning scaling is predictable, empirically. 316

- Church, K.W. & Hestness, J. (2019). Rationalism and empiricism in artificial intellegence: A survey of 25 years of evaluation [in NLP]. 317

- Kaplan, J. et al. (2020). Scaling laws for neural language models. 318

- Rae, J.W. et al. (2022). Scaling language models: Methods, analysis & insights from training Gopher. 319

- Hoffmann, J. et al. (2022). Training compute-optimal large language models (Chinchilla). 320

- Caballero, E., Gupta, K., Rish, I., & Krueger, D. (2022). Broken neural scaling laws. 321

- Constantin, S. (2023). “Scaling Laws” for AI and some implications.

- Muennighoff, N. et al. (2023). Scaling data-constrained language models. 322

- Pandey, R. (2024). gzip predicts data-dependent scaling laws. 323

- Bach, F. (2024). Scaling laws of optimization. 324

- Finzi, M. et al. (2025). Compute-optimal LLMs provably generalize better with scale. 325

3.13.9.7 Language understanding

- NLU

- Mahowald, K. et al. (2023). Dissociating language and thought in large language models: a cognitive perspective. 326

- Kosinski, M. (2023). Theory of mind may have spontaneously emerged in large language models. 327

- Chitra, T. & Prior, H. (2023). Do language models possess knowledge (soundness)?

- Shani, C., Jurafsky, D., LeCun, Y., et Shwartz-Ziv, R. (2025). From tokens to thoughts: How LLMs and humans trade compression for meaning. [^Shani2025]

See also:

3.13.9.8 Interpretability

- Grandmother cell

- Watson, D. & Floridi, L. (2019). The explanation game: A formal framework for interpretable machine learning. 328

- Anthropic. (2021). A mathematical framework for transformer circuits.

- Anthropic. (2022). In-context learning and induction heads.

- Gurnee, W. et al. (2023). Finding neurons in a haystack: Case studies with sparse probing. 329

- Meng, K., Bau, D., Andonian, A., & Belinkov, Y. (2023). Locating and editing factual associations in GPT. 330

- McDougall, C., Conmy, A., Rushing, C., McGrath, T., & Nanda, N. (2023). Copy suppression: Comprehensively understanding an attention head. 331

- Anthropic. (2025). On the biology of a large language model.

328 Watson & Floridi (2019).

329 Gurnee, W. et al. (2023).

330 Meng, Bau, Andonian, & Belinkov (2023).

331 McDougall, C. et al. (2023).

Linear probes:

- Alain, G. & Bengio, Y. (2016). Understanding intermediate layers using linear classifier probes. 332

- Belinkov, Y. (2022). Probing classifiers: Promises, shortcomings, and advances. 333

- Gurnee, W. & Tegmark, M. (2023). Language models represent space and time. 334

3.13.10 Reinforcement learning

- Reinforcement Learning (RL)

- Dynamic programming

- Bellman equation

- Backward induction

- John von Neumann & Oskar Morgenstern. (1944). Theory of Games and Economic Behavior.

Pedagogy:

- Sutton & Barto 335

- Deep Reinforcement Learning: A Brief Survey 336

- Cesa-Bianchi, N. & Lugosi, G. (2006). Prediction, Learning, and Games. 337

- OpenAI. (2018). Spinning Up.

335 Sutton & Barto (2018).

336 Arulkumaran, Deisenroth, Brundage, & Bharath (2017).

337 Cesa-Bianchi & Lugosi (2006).

Tutorials:

- RL course by David Silver

- RL course by Emma Brunskill

- DeepMind Reinforcement Learning Lecture Series 2021

More:

- List by OpenAI of key RL papers

- List of game AI codes by DATA Lab

- Xu, Z., van Hasselt, H., & Silver, D. (2018). Meta-gradient reinforcement learning. 338

- Chen, L. et al. (2021). Decision Transformer: Reinforcement learning via sequence modeling.

- Silver, D., Singh, S., Precup, D., & Sutton, R.S. (2024). Reward is enough. 339

- Javed, K. & Sutton, R.S. (2024). The big world hypothesis and its ramifications for artificial intelligence. 340

338 Xu, Hasselt, & Silver (2018).

339 Silver, Singh, Precup, & Sutton (2024).

340 Javed & Sutton (2024).

See also:

3.13.10.1 Q-learning

- Q-learning and DQN

- Uses the Markov Decision Process (MDP) framework